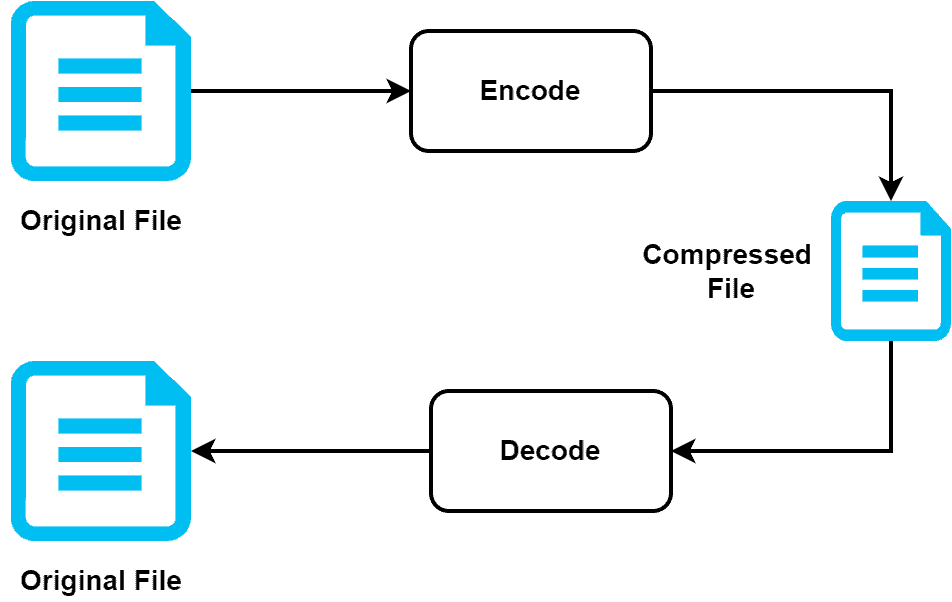

Compression algorithms reduce the size of data so that it takes less storage space and less time to transmit. They work by exploiting redundancy: repeated patterns, predictable values, or (in lossy methods) detail that people are unlikely to perceive. There are several families of compression algorithms, each with its own characteristics and trade-offs.

Lossless compression algorithms preserve the original data exactly: decompressing the output reproduces the input bit for bit. They are used wherever data must be preserved accurately, such as text files, financial records, and medical images. Common lossless algorithms include Huffman coding, LZW (Lempel-Ziv-Welch), and DEFLATE (a combination of LZ77 and Huffman coding, used in ZIP, gzip, and PNG).
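
To see the lossless guarantee concretely, here is a minimal Python sketch using the standard library's `zlib` module, which implements DEFLATE (wrapped in a small zlib header and checksum). The sample record and compression level are arbitrary choices for illustration; the point is that decompression returns exactly the bytes that went in.

```python
import zlib


def deflate_roundtrip(data: bytes, level: int = 9) -> tuple[bytes, bytes]:
    """Compress with zlib (DEFLATE) and then decompress the result."""
    compressed = zlib.compress(data, level)
    restored = zlib.decompress(compressed)
    return compressed, restored


# Repetitive input compresses well: DEFLATE's LZ77 stage replaces repeats with
# back-references, and its Huffman stage gives frequent symbols shorter codes.
original = b"account=1234; amount=100.00; currency=EUR\n" * 200
compressed, restored = deflate_roundtrip(original)

print(len(original), "->", len(compressed), "bytes")
assert restored == original  # lossless: every byte is recovered exactly
```

Highly repetitive input like this shrinks dramatically; random or already-compressed data typically does not shrink at all.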

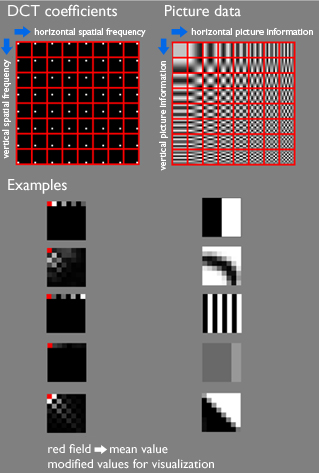

Lossy compression algorithms, on the other hand, sacrifice some accuracy in order to achieve higher compression ratios. They are used for data that can tolerate some loss of quality, such as audio, images, and video. Common lossy formats include MP3 (MPEG-1 Audio Layer III) for audio and JPEG (named after the Joint Photographic Experts Group) for photographs.
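
The core lossy step in many codecs is quantization: values are rounded to a coarser set of levels, and the rounding error is discarded. The sketch below shows uniform scalar quantization on a few made-up samples. It is not MP3 or JPEG, which quantize transform coefficients (for example, DCT coefficients in JPEG) using perceptual tuning, but it shows why the original cannot be recovered exactly: distinct inputs can map to the same quantized value.

```python
def quantize(samples: list[float], step: float) -> list[int]:
    """Map each sample to the index of the nearest multiple of `step`."""
    return [round(x / step) for x in samples]


def dequantize(indices: list[int], step: float) -> list[float]:
    """Reconstruct approximate samples from quantization indices."""
    return [i * step for i in indices]


samples = [0.12, 0.49, 0.51, -0.33, 0.98, -0.71]  # made-up example data
step = 0.25  # larger step: fewer distinct levels, better compression, more error

indices = quantize(samples, step)
approx = dequantize(indices, step)

# 0.49 and 0.51 both land on index 2, so the decoder cannot tell them apart.
max_error = max(abs(a - b) for a, b in zip(samples, approx))
print(indices)
print(approx)
print(f"max error {max_error:.3f} (bounded by step/2 = {step / 2})")
```

A larger `step` produces fewer distinct indices, which a later lossless stage can encode more compactly, at the cost of a larger worst-case error of `step / 2`.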

Dictionary-based compression is a widely used family of lossless algorithms that replaces repeated sequences in the data with short references to a dictionary of previously seen patterns. It works especially well on text and other data with many repeated substrings. LZ77 (Lempel-Ziv 1977) uses a sliding window of recently processed data as an implicit dictionary and encodes each repeat as an (offset, length) reference back into that window, while LZ78 and its variant LZW build an explicit dictionary of phrases as they go.
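
The following is a simplified LZ77 sketch in Python, assuming the classic (offset, length, next character) token format. The window size of 255 and maximum match length of 15 are arbitrary, the match search is brute force, and tokens are kept as Python tuples rather than packed into bits; production formats such as DEFLATE use a different token layout and add Huffman coding on top.

```python
def lz77_encode(data: str, window: int = 255, max_len: int = 15) -> list[tuple[int, int, str]]:
    """Emit (offset, length, next_char) tokens.

    offset/length point back into already-seen text (the sliding window);
    next_char is the literal character that follows the match.
    """
    i, tokens = 0, []
    while i < len(data):
        best_off, best_len = 0, 0
        for j in range(max(0, i - window), i):
            length = 0
            # A match may run past position i (overlap), as in real LZ77.
            # Stop one short of the end so a literal next_char always exists.
            while (length < max_len and i + length < len(data) - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        tokens.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return tokens


def lz77_decode(tokens: list[tuple[int, int, str]]) -> str:
    out: list[str] = []
    for off, length, ch in tokens:
        for _ in range(length):
            out.append(out[-off])  # copy one char at a time so overlaps work
        out.append(ch)
    return "".join(out)


tokens = lz77_encode("abababab")
# tokens == [(0, 0, 'a'), (0, 0, 'b'), (2, 5, 'b')]
assert lz77_decode(tokens) == "abababab"
```

The third token copies five characters from only two positions back, overlapping the text it is producing; this is how LZ77 encodes long runs compactly, and why the decoder copies one character at a time.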

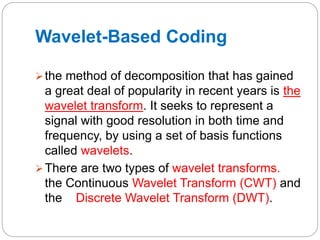

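Many practical codecs are hybrids that combine lossy and lossless stages: a lossy stage (a transform followed by quantization) discards detail, and a lossless entropy coder then packs the result compactly. JPEG itself works this way, applying Huffman coding to quantized DCT coefficients in its common baseline mode. JPEG 2000 goes further by offering both modes within one standard. It uses a discrete wavelet transform, with a reversible integer wavelet for lossless compression and an irreversible wavelet followed by quantization for lossy compression, so a single format can trade compression ratio against image quality.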
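
The split between a reversible (lossless) path and a quantized (lossy) path can be illustrated with the simplest reversible wavelet, the integer Haar (or S) transform, computed by lifting. This is a hedged stand-in: JPEG 2000 actually uses the 5/3 wavelet for its reversible path and the 9/7 wavelet for its irreversible path, applies the transform in two dimensions over several levels, and entropy-codes the result. In this sketch, skipping quantization gives an exact round trip, while quantizing the detail coefficients with a hypothetical step `q` gives a close but inexact reconstruction.

```python
def haar_forward(x: list[int]) -> tuple[list[int], list[int]]:
    """One level of the integer Haar (S) transform; len(x) must be even."""
    approx, detail = [], []
    for a, b in zip(x[0::2], x[1::2]):
        d = a - b          # detail: difference within the pair
        s = b + (d >> 1)   # approximation: floor((a + b) / 2), integers only
        approx.append(s)
        detail.append(d)
    return approx, detail


def haar_inverse(approx: list[int], detail: list[int]) -> list[int]:
    """Undo haar_forward exactly by reversing the lifting steps."""
    out = []
    for s, d in zip(approx, detail):
        b = s - (d >> 1)
        a = d + b
        out.extend([a, b])
    return out


def quantize_details(detail: list[int], q: int) -> list[int]:
    """Lossy step: round each detail coefficient to a multiple of q."""
    return [round(d / q) * q for d in detail]


signal = [10, 12, 13, 13, 40, 41, 42, 45]  # made-up example samples
approx, detail = haar_forward(signal)

# Lossless path: no quantization, so the inverse recovers the input exactly.
lossless = haar_inverse(approx, detail)
assert lossless == signal

# Lossy path: small details round to 0 (cheap for a later entropy coder),
# so the reconstruction is only approximate.
q = 4  # hypothetical quantization step
lossy = haar_inverse(approx, quantize_details(detail, q))
print(signal)
print(lossy)
```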

In summary, compression algorithms are essential tools for reducing the size of data for storage and transmission. Lossless algorithms, including the dictionary-based LZ family, recover the input exactly; lossy algorithms trade some fidelity for higher compression ratios; and hybrid schemes combine a lossy stage with lossless entropy coding, in some cases (as in JPEG 2000) offering both lossless and lossy modes in one format.